AGI is an Engineering Problem

Until this decade, artificial general intelligence was a scientific problem. The main ideas to build it were missing. In 1999, Shane Legg (cofounder of Google DeepMind), predicted we’d build AGI in 2028 based on extrapolations of compute power trends. His prescience on reinforcement learning is remarkable, but the vision was necessarily fuzzy. This is no longer the case. Sam Altman announced recently:

We are now confident we know how to build AGI as we have traditionally understood it...[w]e are beginning to turn our aim beyond that, to superintelligence in the true sense of the word.

Building AGI has become an engineering problem.

The ‘Eye of Sauron’-Theory-of-Research-Progress

When thinking about future AI progress, follow the research priorities of leading AI labs. I often imagine their research focus as “The Eye of Sauron”, the great flaming eye from Lord of the Rings. What The Eye gazes upon becomes the industry's all-consuming focus; what it ignores remains unsolved - not because it's impossible, but because it's not yet time.

Take emotional intelligence. Perhaps the model needs to sound more natural, needs lower latency, needs to reason about the information you give it, textually, from your voice, and from body language. Or perhaps, it just needs to text you first. Increased latency, personality post-training and better UIs gets most of the way there. But The Eye isn’t looking here yet.

For the past few years, The Eye has settled its gaze squarely at scaling training. And instead of trying to fully elicit the capabilities of the model, or fix particular quirks in its behaviour, the attitude has been “just scale”, and it’ll be fixed.

Listen to the labs

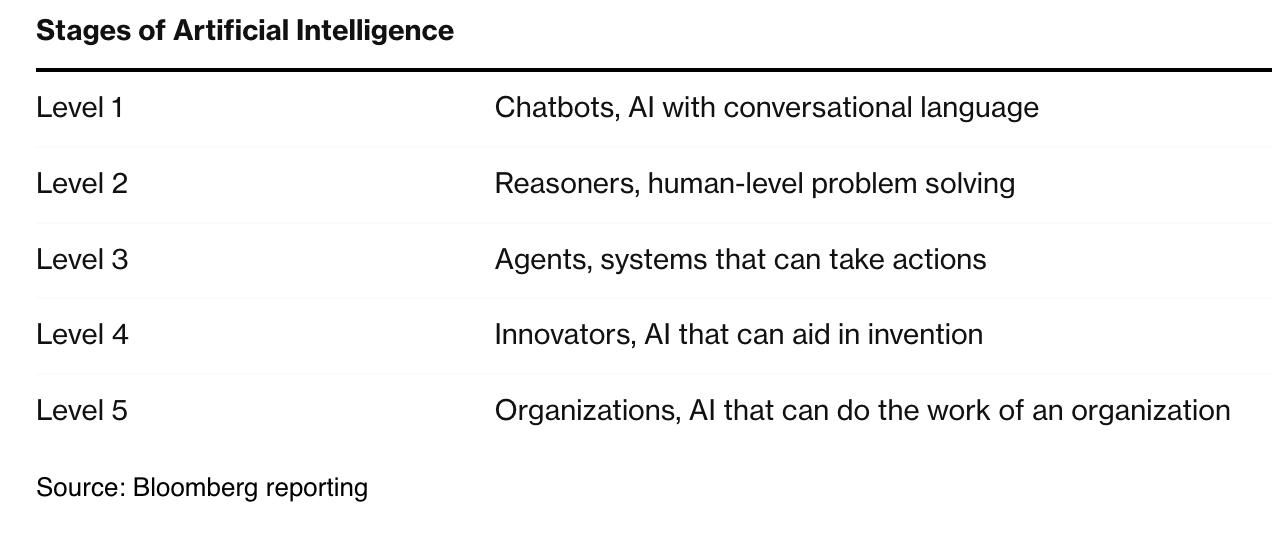

To see where The Eye points next, we don't have to guess - we can listen to what the labs have told us. OpenAI's “five levels of AGI”, first shown in an employee all-hands and later published by Bloomberg, maps out their critical path: first chatbots, then reasoners, next agents; before 'innovators', and finally 'organisations'.

Why these levels? The goal of the AGI companies is to have organisations of automated AI researchers, so that increasing the scale of training runs can be improved at an ever improving rate. The AI researchers would be able to work on any capability that is required to meet any of many definitions of AGI today, and beyond.

Understanding this critical path is useful in two ways:

First, it helps one track the most important events in AI and understand their true significance in solving “what’s left” to create powerful systems. For example, most of Google DeepMind's early work on beating video games like Atari wasn't because they cared about gaming per se, but about solving key problems in planning and goal-directedness (and their recent foundation world model, which can generate interactive environments, are explicitly for training agents). We see this in reasoning capabilities too: After o1’s breakthrough in mathematical reasoning, many dismissed Deepseek's R1 as a mere copy. But Deepseek's earlier papers reveal a different story: the Chinese AI lab had developed expertise in formal verification and Monte-Carlo tree search - key techniques for training reasoning systems - before O1 was published, perhaps indicating a pattern in how reasoning capabilities emerge.

Perhaps most tellingly, while many of OpenAI's best researchers have departed (Ilya Sutskever, Mira Murati, John Schulman, Alec Radford, Barret Zoph, Bob McGrew, Jan Lieke), it hasn’t affected the company’s valuation much—raising $6.6 billion at a $157 billion post-money valuation suggests we're far from the regime where breakthrough insights from star researchers are the limiting factor.

Second, it helps one to ignore weird forms of brittleness in the models, which aren’t going to matter in the end. Mainstream discourse about AI seems bizarrely eager to coalesce around the weaknesses of the models: “LLMs are stochastic parrots”, “the ‘reversal curse’ meant AI was doomed to fail”, “the models can’t count the number of letters in a word”, the models struggle when they are asked to perform tasks ‘backwards’; the models can’t do simple visual reasoning puzzles.

These debates misunderstand the ‘inside view’ from the labs—their sole research focus is the next step on the critical path. Specifically, in the last 3 years, the returns to scaling pre-training have been so high that it was nigh-on unjustifiable to dedicate researcher time and compute to anything that wasn’t a) making this scale more, or b) figuring out the ‘big ideas’ afterwards. Do you really think that AI labs—who have assembled a density of talent in the core research teams, not seen since the Manhattan Project—couldn’t solve the reversal curse if they needed to? The answer is that distracting The Eye of Sauron from the most important problems simply isn’t worth it.

The inverse of this, of course, is that it makes salient which challenges are worth paying attention to – Ilya Sutskever, a leading researcher who is paying attention to the critical path, notes that “pre-training as we know it will unquestionably end”, and, as a result, we'll need to jump to the next paradigm.

Concept, Scale, Apply: The Simplest Story of AI R&D

In the broadest of strokes, there are three stages needed to make progress on each of the five levels to AGI:

Concept: Proving a novel idea works at all, even crudely

Scale: Engineering a proven concept into a deployment-ready system

Apply: Transforming the technology into systems that create real-world value and can be widely deployed

We can track the progress on OpenAI’s levels to AGI so far:

Sometimes the "Concept" stage splits into two phases: first proving a raw concept works at all (Attention, CoT), then developing it into something that advances the critical path to AGI (GPT-2, o1). We can see this pattern emerging with AI Agency.

Agency

The starting shot of this era may have begun with Anthropic's computer use. As Dario Amodei explains in a recent interview, they jury-rigged computer control by training Claude to analyse screenshots and output click locations and keyboard commands, which could be chained together in a loop (show image, get click location, execute, repeat) to enable basic computer interaction across operating systems.

It's remarkable that this works at all — and to be clear, it barely does — because these models were trained for conversation. They lack direct understanding of computer interfaces, struggle with persistent memory across screenshots, and must awkwardly communicate every action in English (“click at coordinates 342, 156”) rather than having native computer control. They’re limited by their context windows, making it hard to handle complex, long-running tasks. This is a scrappily cobbled together agent with existing systems – not one built from the ground up.

But that’s next.

This proof of concept showed how agency can be broken into building blocks: goal consistency— can the agent maintain its objective over time; planning—can the model break down tasks into smaller steps; memory—can the model retain context across long sequences; and tools— can the model interact with a computer and APIs?

The hardest challenge is goal consistency – how do we get the models to maintain their objective?

Goal Consistency: Teaching agents to stay focused

Chatbots have already learned a basic form of goal consistency: maintaining their role as helpful, honest, and harmless assistants throughout a conversation. This ability emerged from training on examples of good behavior (observational learning) and receiving feedback from both human and AI raters (interactive learning). These same principles could extend to maintaining focus on “long-horizon tasks” – where the agent needs to stay aligned with its objectives over extended periods and multiple interactions.

Learning from examples

AI agents start like inexperienced employees — they need to first learn what basic tasks look like before they can work independently. Observational learning provides this foundation by having models observe and copy human demonstrations, like watching recordings of people using computers to complete tasks. This teaches the model valid actions (clicking, typing, navigating interfaces) and basic workflows, just as a new hire might shadow a senior employee to learn the basics of their role.

The internet does not have enough data where people complete a diverse range of tasks on their computer. This will require deliberate data collection and construction. Companies can (and do) hire knowledge workers to record their work as training data, which allows models to learn both the specific tasks being completed and the broader patterns of how humans approach and execute complex workflows. For the less scrupulous, there are many options available. Companies could collect data directly from users, either as a condition of use for their operating system, or without their knowledge (previously, Microsoft’s AI-powered Recall feature took screenshots at regular intervals). Another rich source of training data could come from the vast library of programming tutorials and screen recordings on YouTube, using AI to convert these visual demonstrations into structured sequences of computer actions.

But observational learning alone is insufficient for building capable agents. A model trained only on imitation can repeat patterns it has seen but lacks understanding of goals and struggles with novel situations. Most critically, pure imitation provides no feedback loop - the model has no way to know if its actions succeeded or failed.1 That's why observational learning serves as just the first step, followed by interactive approaches where agents can receive feedback on their actions and learn to achieve objectives rather than simply mimic behaviors.

Interactive Learning: Human, AI and environmental feedback

Just as a new hire moves from watching training videos to working with a mentor, AI agents progress from imitation to receiving direct feedback. This feedback — “reward signals” from the environment or human/AI raters — acts as a carrot and stick, teaching agents which actions help achieve their goals and which don't. While imitation provides the basic playbook, this interactive feedback loop is essential for agents to learn how to stay focused and adapt their approach as tasks evolve.

Inspired by the “think step by step” approach that improved language model reasoning — where models explicitly break down their thinking into smaller logical steps - we can have agents decompose complex tasks into verifiable subtasks. Think of a task like “set up a new web app” — this can be broken into concrete steps: creating directories, initialising repositories, installing dependencies, writing server code, and deploying. At each step, a verification system checks if that specific subtask was completed correctly: did the directory get created with proper permissions? Did the git repo initialize? Did dependencies install without errors?

This granular feedback at each step provides much clearer learning signals than only evaluating the final outcome.

In general, reward signals could come from both monitoring the environment and external feedback. The system can monitor basic system state - checking if files exist, if programs are running, and if there are any error messages. Beyond these basics, task-specific verification is possible through automated testing frameworks checking if code works, static analysis tools evaluating code quality, and performance metrics tracking factors like load times and memory usage.

This internal verification could be supplemented with human feedback. For each task, the system generates multiple different trajectories - different attempts at accomplishing the goal. Human raters can pick which attempts they prefer and give more fine-grained feedback to the models. They consider nuances about how the task was executed, not just whether it was complete: how clean was the code? How efficient was the solution? Was the reasoning logical and simple to follow? And this approach isn't limited to coding — you could imagine designing systems that let users rate how well an agent handled their email or organized their files at each step.

While AI systems can do some of this trajectory labelling too, human raters are (at least currently!) able to provide feedback on higher-level qualities and catch the subtlest errors or misaligned behaviors.2

These verification and feedback systems, while still in early stages, suggest a path to robust goal-following.

Memory: Remembering in ‘neuralese’

Current language models have to process everything through their context window or on an English language scratchpad, which limits their “working memory.” Yet, similarly, the main ideas are in place. In a recent paper, Meta researchers demonstrated how agents could maintain ongoing “thoughts” and “memories” in a compressed neural form (sometimes called “neuralese”). Instead of English-language tokens, it can represent objectives and information in an efficient way native to its own processing. Just as Claude’s computer use demonstrated a proof-of-concept for AI agency, scaling neuralese could enable efficient memory for future AI agents.

Planning: Scale what already works

Similar to goal consistency, we get some amount of planning capability “for free” from existing models. We can improve this through learning from expert examples too—imagine hiring McKinsey consultants to break down projects into actionable steps, or implicitly learning from software engineers. We might even get this capability automatically as a byproduct of teaching models to maintain consistent goals over long horizons—teaching agents to reliably pursue objectives might naturally improve their ability to break down and organize complex tasks.

Tool Use: Building native AI interfaces

Current AI-computer interfaces are inefficient – language models that do use external tools, like ChatGPT with plugins, or Claude Computer Use spit out awkwardly formatted JSON files to call APIs or move mechanically around websites for humans.

The solution is fundamentally an engineering challenge. One perhaps overkill approach would be to encode computer actions directly into the model’s input/output space - rather than inputting and outputting English, the model could output neural patterns that directly map to system operations. But will probably not be necessary – there is lots of low-hanging fruit. We’re still using interfaces designed for humans, instead of the rigid “ask and respond” format, we could develop fluid protocols where models maintain continuous connections with tools, cache key information and share information efficiently.

Putting It Together: Engineering Agents

Somewhere in a data center in Arizona, thousands of AI agents are probably humming away on virtual machines, working through tasks, receiving feedback on their trajectories, and, increasingly, getting better and better. And that’s not to underrate the fiendish engineering challenge. But the main ideas are in place.

We can point to the key components: reinforcement learning for goal consistency, memory architectures that maintain state without token bloat, planning systems that decompose tasks effectively, and tool use interfaces designed natively for AI. None of these requires fundamental breakthroughs - we understand what needs to be built. The next phase is engineering these components to production scale - creating memory systems that work across hour-long tasks and millions of tokens, planning systems that break down complex tasks reliably, and tool interfaces or operating systems optimized for AI interaction.

And such agents, with goal-coherence, memory, planning and tool-use, is the foundation for building AI’s that can make real discoveries.

Invention

To build AGI, AI systems must have some ability to make “conceptual leaps.” I like to conceptualise “invention” as the ability to spot useful similarities between distant ideas from just a few examples.

Creativity is just connecting seemingly disparate ideas and having better taste in which long distance connections might be fruitful.

Consider how a physicist solves a new problem: they might see just a few examples and recognise, “ah, this behaves like that system I studied last year.” They don't need thousands of examples - they can interpolate from a sparse set of observations to grasp the underlying pattern. The more expertise they develop, the better they become at making these leaps with less data.

But this is difficult to get from imitation alone.

The Generator-Verifier Loop: Verifying which ideas work

For Einstein-level conceptual leaps, raw generation capability isn’t enough – current models can already generate generate endless variations on existing ideas. What matters is developing the meta-cognitive ability of recognising which creative leaps are actually valuable.

The key idea is to combine powerful generators with ground-truth verifiers. The generator-verifier loop provides rapid, reliable feedback. Take mathematics: when a model proposes a novel proof, automated theorem provers can immediately verify if it works. This instant feedback helps the model learn which intuitive leaps are actually fruitful.

And, like with “goal consistency” in “Agency”, we can give this feedback step-by-step, rather than a single time at the end. A 2023 paper from OpenAI, “Let’s Verify Step by Step”, shows that providing feedback on each step of a model's reasoning process, rather than just the final answer, dramatically improves performance on mathematical problems. This makes sense – you’ll learn more from a tutor who checks each step of your work, rather than just a right or wrong.

For this to work, verification has to be easier than generation.3 In some domains like math and coding, this is intuitive – where we can check if theorems are valid, or code compiles. But verification doesn’t have to come from external systems. AI models can be their own verifiers.

We already see “verifier” language models that check the reasoning of a “generator’s” reasoning steps. This AI-based verification unlocks new domains: a model trained on scientific papers could learn to evaluate whether a proposed hypothesis is consistent with known evidence, or train itself on journal reviewers comments.

This further implies that as models get better at verification in one domain, they can help train better generators in related domains. The result is a kind of bootstrapping process. Each advance in verification enables better training of creative leaps, which in turn enables more sophisticated verification. Once we solve the core engineering challenges of fast, reliable verification, we could see rapid progress in models' ability to make genuine discoveries.

Synthetic Data: Using AI to generate training data

This verification process doesn't need to happen within a single model or during inference. As we approach the limits of available internet data for pretraining, the future of scaling likely lies in synthetic data generation - using expensive but capable reasoning models to generate high-quality training examples that simpler models can learn from. DeepSeek demonstrated this with r1 and V3: rather than having V3 develop reasoning capabilities from scratch during inference, they used r1’s strong reasoning abilities to generate verified examples for V3's training (similarly, o1 was rumored to be primarily a synthetic data generation model).

This trades expensive inference-time compute for one-time pretraining compute - once a model learns these verified patterns during training, it can apply them quickly during inference. It's a way to front-load the exploration and verification cost rather than paying it repeatedly at runtime.

The Path Forward: The Automated Research Fleet

The last stage of OpenAI’s five levels is to have AIs run organizations – this will require massive coordination. A fully automated AI organization will not have individual AI models in chat windows, or even a single AI agent operating alone on a virtual machine, but instead an automated research fleet: some proving theorems, others reviewing literature, generating hypotheses, running experiments, analyzing results, and developing new paradigms.

But the labs are confident. As Paul Graham reminds us, read the job listings. Three months ago, OpenAI began hiring for a new multiagent research team. Just this week, they've done the same for a new robotics team – the next frontier after software singularity.

We will build AGI before we agree on its definition. For whatever metrics we choose, whatever capabilities we demand, the main ideas are already here – or soon will be, discovered by the automated research fleets that lie at the end of the critical path. The science is done. What remains is engineering.

Acknowledgements: This piece benefited enormously from Jack Wiseman's extensive editing and substantial contributions throughout. Thanks also to Duncan McClements, Thomas Larsen, Eli Lifland, Daniel Kokotajlo, Somsubhro Bagchi, Niki Howe, Ariel Cheng, Nathaniel Li, Andrea Miotti, Samuel Ratnam, Sanskriti Shindadkar, Nat Kozak, Maximilian Nicholson, Xavi Costafreda-Fu, Philip Guo, Jacob Goldman-Wetzler, Jeremy Ornstein, Miles Kodama, Ollie Jaffe, Ananth Vivekanand, Saheb Gulati, Chris Pang, Xi Da, and Sudarshanagopal Kunnavakkam for their thoughtful feedback and suggestions. And thanks to the many others who provided valuable input and discussions.

All mistakes and oversights remain my own.

This does slightly simplify. Offline reinforcement learning learns from datasets of past experiences - each containing states, actions, and their resulting rewards. While this provides a feedback loop through historical data, it's limited to learning only from previously tried approaches. Without being able to actively test new strategies, agents struggle to develop truly robust goal-directed behavior that can handle novel situations. The main point still stands - agents need interactive learning to develop robust goal-directed behavior.

And there are lots of engineering tricks and low-hanging fruit that boost performance. For example, for critical decisions requiring high confidence, rather than always using the same amount of compute, the system can choose to spend extra “inference-time compute” when faced with especially important goal-related choices. The system can delegate simpler tasks to cheaper models while using compute-intensive procedures - like running extensive simulations or generating and evaluating multiple solution attempts - for complex decisions. It could ask you, if it’s unsure. Or try to better elicit the goal that you actually want.

And, in general it is! (In the most general case, P != NP, we hope – h/t Miles K).

In practice, though, there are exceptions. There are important exceptions to the "verification is easier than generation" principle. For instance, in DNA synthesis, verifying if a sequence works requires physically making it in a test tube - a process more costly/bottlenecked than computationally generating candidate sequences (though this could be solved by better simulation models). Similarly, in AI alignment, verifying if a model is genuinely safe and honest can be harder than training it to generate outputs, since you can't trust the model's own explanations (it might be deceptive), can't rely on other AI verifiers (they could also be deceptive), and humans may find it intractable to verify complex reasoning patterns.